
Andriy Sarabakha, Assistant Professor (Tenure Track), Department of Electrical and Computer Engineering, Aarhus University, Denmark


Drone racing is a recreational sport in which the goal is to pass through a sequence of gates in the minimum time while avoiding collisions with the environment. In autonomous drone racing, this task must be accomplished by flying fully autonomously in an unknown environment, relying only on on-board resources. Autonomous drone racing is therefore an exciting case study that aims to motivate more researchers to develop innovative solutions to complex problems. What makes drone racing such an interesting challenge is the combined complexity of its sub-problems, such as perception, localisation, path planning and control.

The Autonomous Drone Racing Competition @ IEEE SSCI 2025 is an aerial robotics challenge in which research groups will validate their fuzzy logic controllers in a challenging scenario – autonomous drone racing.

The participating groups can address two major challenges in autonomous drone racing:

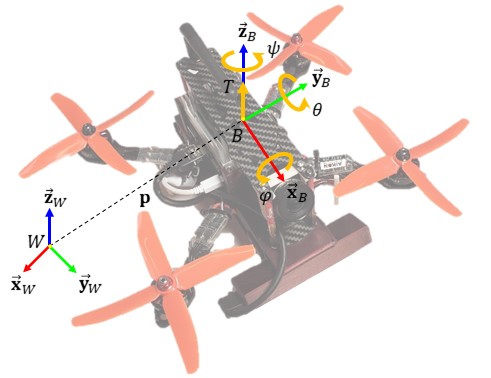

This challenge aims to design intelligent controllers that can operate in the nonlinear regions of the drone's dynamical model at high speeds. The number and pose (position and orientation) of the gates will be predefined, and the drone will have to cross them in a given sequence. For gate detection, an on-board wide-angle camera with a 90° field of view is used. Trajectories at fixed velocities through the centres of all gates will be generated using the curve fitting method and made available to the participants. However, each team is free to implement its own trajectory generation strategy to achieve better control performance. Real-time noisy localisation, consisting of the estimated position and attitude, will be provided to simulate visual-inertial odometry, whose performance deteriorates at high speeds due to motion blur and accelerometer noise. The task of each team is to develop a fuzzy logic controller that takes the desired trajectory and the current pose of the drone as inputs and provides the commanded attitude and thrust as control commands, as depicted in Figure 1.
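As a rough illustration of what the controller block in Figure 1 might contain, the sketch below implements a zero-order Takagi-Sugeno fuzzy PD law per axis. Normalised position and velocity errors are fuzzified with three triangular sets, a 3×3 rule table gives singleton outputs, and the weighted average is scaled to a roll, pitch or thrust command. This is not the competition's reference controller, and it is written in Python rather than MATLAB for brevity. The rule table, scaling constants, mass, tilt limit and the z-up/ZYX sign convention are all hypothetical assumptions. Velocities are assumed to be estimated separately (for example, by differencing the noisy position), since only position and attitude are provided.

```python
import math

# Triangular fuzzy sets on the normalised universe [-1, 1]:
# N (negative), Z (zero), P (positive), given as (left foot, peak, right foot).
MF = {"N": (-2.0, -1.0, 0.0), "Z": (-1.0, 0.0, 1.0), "P": (0.0, 1.0, 2.0)}

# Hypothetical rule table: (error set, error-rate set) -> singleton output.
RULES = {
    ("N", "N"): -1.0, ("N", "Z"): -0.7, ("N", "P"): 0.0,
    ("Z", "N"): -0.4, ("Z", "Z"): 0.0, ("Z", "P"): 0.4,
    ("P", "N"): 0.0, ("P", "Z"): 0.7, ("P", "P"): 1.0,
}

G = 9.81                      # gravity [m/s^2]
MASS = 1.0                    # hypothetical drone mass [kg]
MAX_TILT = math.radians(30)   # hypothetical roll/pitch limit [rad]
MAX_ACC_Z = 3.0               # hypothetical vertical authority [m/s^2]
E_SCALE, DE_SCALE = 2.0, 2.0  # hypothetical normalisation [m], [m/s]


def tri(x, a, b, c):
    """Triangular membership degree of x (feet a and c, peak b)."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


def fuzzy_pd(e, de):
    """Zero-order Sugeno inference: normalised (e, de) -> output in [-1, 1]."""
    e, de = max(-1.0, min(1.0, e)), max(-1.0, min(1.0, de))  # saturate inputs
    num = den = 0.0
    for (le, lde), out in RULES.items():
        w = tri(e, *MF[le]) * tri(de, *MF[lde])  # product t-norm (AND)
        num += w * out
        den += w
    return num / den if den > 0.0 else 0.0  # weighted-average defuzzification


def controller(p_des, v_des, p, v, yaw):
    """Map desired/current position and velocity to (roll, pitch, thrust).

    Assumes a z-up world frame and ZYX Euler angles, so positive pitch tilts
    thrust towards heading +x and positive roll tilts it towards heading -y.
    """
    ex, ey, ez = (p_des[i] - p[i] for i in range(3))
    dx, dy, dz = (v_des[i] - v[i] for i in range(3))
    c, s = math.cos(yaw), math.sin(yaw)
    # Express horizontal errors in the yaw-aligned (heading) frame.
    ebx, eby = c * ex + s * ey, -s * ex + c * ey
    dbx, dby = c * dx + s * dy, -s * dx + c * dy
    pitch = MAX_TILT * fuzzy_pd(ebx / E_SCALE, dbx / DE_SCALE)
    roll = -MAX_TILT * fuzzy_pd(eby / E_SCALE, dby / DE_SCALE)
    acc_z = G + MAX_ACC_Z * fuzzy_pd(ez / E_SCALE, dz / DE_SCALE)
    # Compensate for tilt so the vertical thrust component stays MASS * acc_z.
    thrust = MASS * acc_z / (math.cos(roll) * math.cos(pitch))
    return roll, pitch, thrust
```

A Mamdani controller with centroid defuzzification would be an equally valid choice. The Sugeno form is used here because its weighted-average output is cheap to evaluate, which helps with the real-time requirement.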

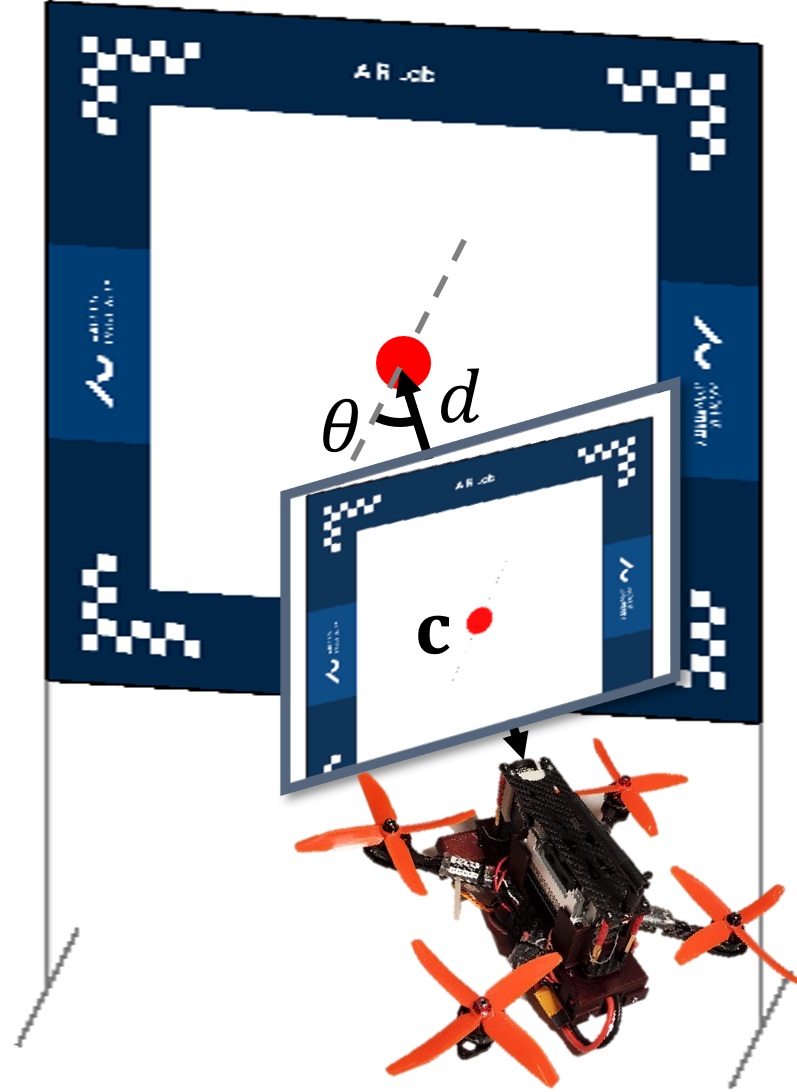

In this challenge, the task is to design 3D perception methods that can accurately estimate the location of a gate, shown in Figure 2, relative to a global frame under various lighting conditions and under motion blur caused by the drone's high speed. The detection consists of determining the x and y position of the gate's centre c, the distance d and the orientation θ of the gate relative to the drone's body frame. Participants are provided with a limited training dataset consisting of gate images captured from the drone and the associated ground-truth poses of the gates and the drone in a global frame. They will be required to create a correct map of the gates in the testing dataset, given only the ground-truth pose of the drone.
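Building the map from the detections is essentially a frame transformation. The sketch below relies on several assumptions that are not part of the provided datasets:

- a pinhole camera on the nose, aligned with the body x-axis, whose 90° field of view is horizontal;
- (u, v) are the pixel coordinates of the gate centre c;
- d is the Euclidean distance from the camera to c;
- θ is the gate's yaw relative to the drone;
- drone attitude follows the ZYX Euler convention.

All function names are hypothetical.

```python
import math


def focal_from_fov(width_px, fov_deg=90.0):
    """Pinhole focal length in pixels for a horizontal field of view."""
    return (width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)


def rot_zyx(roll, pitch, yaw):
    """Body-to-world rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def gate_to_world(u, v, d, theta, width_px, height_px, drone_pos, drone_rpy):
    """Convert an image-space gate detection into a world-frame gate pose.

    Returns ((x, y, z), yaw) of the gate centre in the global frame.
    """
    f = focal_from_fov(width_px)
    # Unit ray through pixel (u, v) in the camera frame (x right, y down, z forward).
    xc, yc = (u - width_px / 2.0) / f, (v - height_px / 2.0) / f
    n = math.sqrt(xc * xc + yc * yc + 1.0)
    ray_cam = (xc / n, yc / n, 1.0 / n)
    # Camera frame -> body frame (x forward, y left, z up).
    ray_body = (ray_cam[2], -ray_cam[0], -ray_cam[1])
    R = rot_zyx(*drone_rpy)
    pos = tuple(
        drone_pos[i] + d * sum(R[i][k] * ray_body[k] for k in range(3))
        for i in range(3)
    )
    yaw = drone_rpy[2] + theta
    return pos, math.atan2(math.sin(yaw), math.cos(yaw))  # wrap yaw to [-pi, pi]
```

For example, a gate detected at the image centre, 5 m away, from a level drone at (1, 2, 3) with zero yaw maps to (6, 2, 3) in the global frame.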

The simulation environment of the racing track with racing gates is implemented in MATLAB and has been tested in MATLAB R2020b. A sample environment is shown in Figure 3. The latest version of the simulation environment can be downloaded from the GitHub repository. The simulation package contains five files:

Please note that only the content of controller.m and trajectory.m can be modified.

Remark. The poses of the gates during the competition will differ from those in the scenario provided to the teams for developing their controllers.

Remark. For any technical issues with the simulation environment, please open a new issue on GitHub or send an email enquiry to the Organisers.

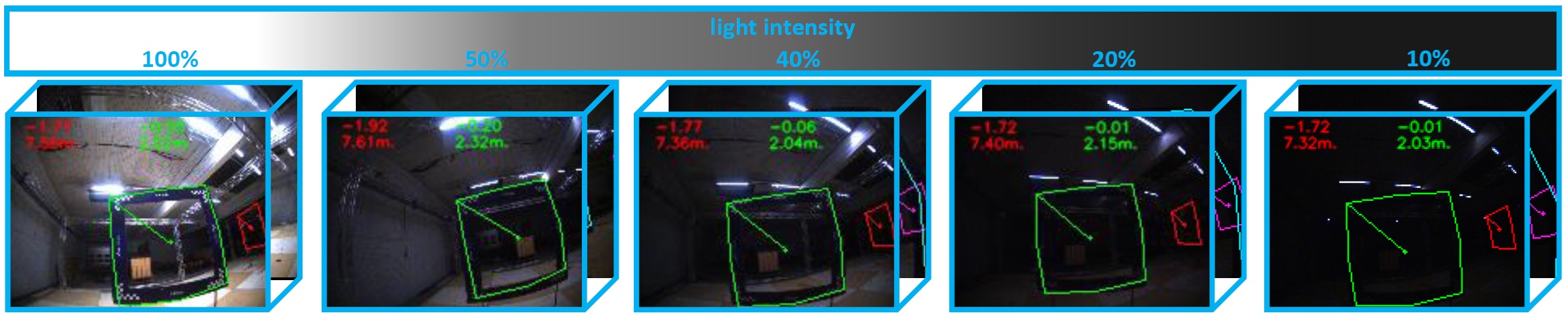

For this challenge, both a training dataset, illustrated in Figure 4, and a testing dataset will be provided. The datasets include drone images in PNG format and a JSON file containing the ground-truth information of the drone's pose. For the training data, the ground-truth information of the gates is also provided.

The Evaluation Committee will evaluate the developed controllers on the testing racing track and the teams' gate predictions on the testing dataset. The teams will be notified of the acceptance of their results on January 20, 2025. The results will be announced during IEEE SSCI 2025 in March 2025.

The controllers will be evaluated in a similar MATLAB environment, but with different gate positions. The main prerequisite is a real-time implementation of the controller; in other words, the controller must run at a minimum of 100 Hz. Each team will be given 60 seconds to cross as many gates as possible in a predefined sequence. The evaluation rules will be as follows:
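Running at 100 Hz leaves a budget of 1/100 s = 10 ms per control step, and a 60 s run corresponds to 6,000 steps. One quick way to check a controller against this requirement is to measure its worst-case execution time over many calls. The helper below is a hypothetical Python sketch. The official evaluation runs in MATLAB, where timings will differ, so a similar check there (for example, with `tic`/`toc`) would be more representative.

```python
import time

STEPS_PER_RUN = 60 * 100  # 6000 control steps in a 60 s run at 100 Hz


def meets_rate(controller, inputs, rate_hz=100.0):
    """Check the worst-case run time of `controller` against a 1/rate_hz budget.

    `inputs` is an iterable of argument tuples; returns (ok, worst_s, budget_s).
    """
    budget = 1.0 / rate_hz
    worst = 0.0
    for args in inputs:
        t0 = time.perf_counter()
        controller(*args)
        worst = max(worst, time.perf_counter() - t0)
    return worst <= budget, worst, budget
```

The worst case is used rather than the mean because a single overrun can delay a control update at high speed.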

Participants will be ranked based on the average accuracy of their perception results compared to the ground-truth information of the gates in the test dataset.
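The exact accuracy metric is not specified here. One plausible choice, shown below purely as an assumption, is to report the mean Euclidean position error and the mean absolute yaw error (with angle wrap-around) between predicted and ground-truth gates. Gates are matched by index, and all names are hypothetical.

```python
import math


def angle_error(a, b):
    """Absolute difference between two angles [rad], wrapped to [0, pi]."""
    return abs((a - b + math.pi) % (2.0 * math.pi) - math.pi)


def mean_gate_errors(pred, truth):
    """Mean position error [m] and mean yaw error [rad] over matched gates.

    `pred` and `truth` are equal-length lists of (x, y, z, yaw), matched by index.
    """
    if not truth or len(pred) != len(truth):
        raise ValueError("pred and truth must be non-empty and of equal length")
    n = len(truth)
    pos_err = sum(math.dist(p[:3], t[:3]) for p, t in zip(pred, truth)) / n
    yaw_err = sum(angle_error(p[3], t[3]) for p, t in zip(pred, truth)) / n
    return pos_err, yaw_err
```

If predictions are not ordered like the ground truth, a matching step (for example, nearest neighbour or the Hungarian algorithm) would be needed before averaging.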

The teams must submit the following MATLAB files: